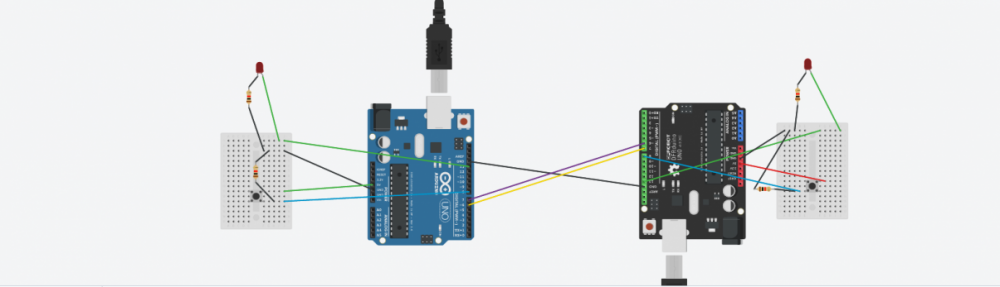

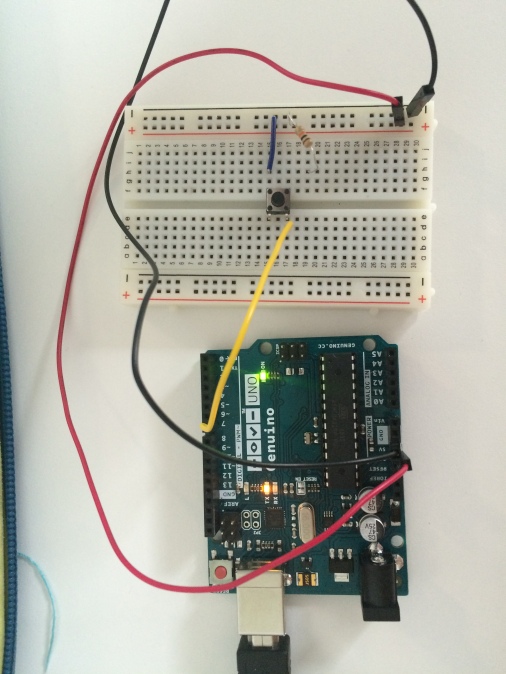

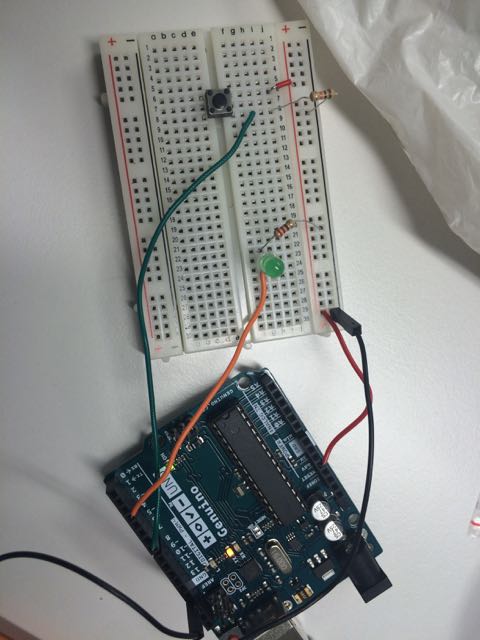

For my final I created a computational, interactive reading of A Thousand Plateaus, a philosophical book written in 1980 by Deleuze and Guattari. The first “plateau,” or chapter, of the book is entitled “The Rhizome” and lays out the framework for the structure of the book as a whole and the method of inquiry they propose. Most fundamental to the rhizome is its anti-linear method; accordingly, the reader is invited to read the plateaus in any order.

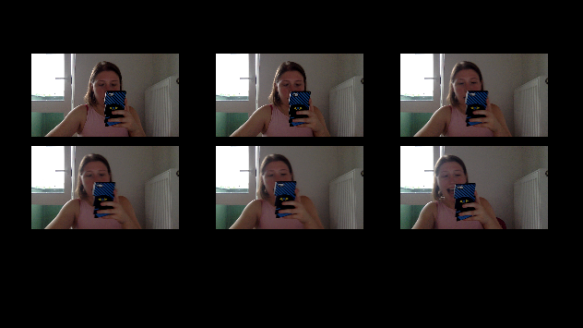

To actualize the rhizomatic ambitions of the book, I created a program that lets users click on circles (buttons); each click prompts a randomly chosen paragraph from the chapter to be read out loud to them. The image is the illustration from the chapter within the book: a piano piece written for David Tudor, an early experimental artist and composer whose work could definitely be said to be rhizomatic.

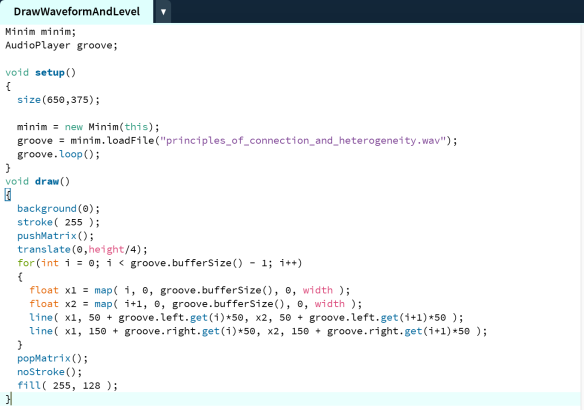

To achieve this randomized effect, I created an array of movies, where each movie pairs a sound clip of a paragraph being read out loud with its accompanying sound waves, to further the overall connection to music and composition within the original book. I found the code for the waveform among the examples in the Minim library and altered it a little so that it fit the aesthetics of my own project.

The next step was creating an array of movies that could be called at random. Here, randomClip() is a function I created:

Movie [] mymovies = new Movie [7];

void setup(){

for (int i = 0; i<mymovies.length; i++) {

int tempNum = i+1;

String movieName = tempNum + ".mp4";

mymovies[i] = new Movie (this, movieName);

}

}

void randomClip() {

//stop everything else

for (int i=0; i<mymovies.length; i++) {

mymovies[i].stop();

}

//randomize a number corresponding to video

int randomNum = floor(random(0, 7));

moviePlayingNow = randomNum;

//call movie to play

mymovies[moviePlayingNow].play();

state = 1;

}
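The random selection above can also be looked at on its own, outside Processing. The following is a minimal plain-Java sketch, not the code from the project: the class name `ClipPicker` and its methods are hypothetical, and `java.util.Random` stands in for Processing's `random()`. It assumes the same file naming as above, where clip `i` in the array is stored as `(i + 1) + ".mp4"`, and it derives the range of the random index from the array length instead of hard-coding 7.

```java
import java.util.Random;

// Hypothetical helper, for illustration only: picks a random clip index
// and maps it to a file name following the "1.mp4" ... "7.mp4" convention.
class ClipPicker {
    private final String[] clipNames;
    private final Random rng;

    ClipPicker(int clipCount, Random rng) {
        this.clipNames = new String[clipCount];
        for (int i = 0; i < clipCount; i++) {
            // Array index 0 corresponds to file "1.mp4", and so on.
            this.clipNames[i] = (i + 1) + ".mp4";
        }
        this.rng = rng;
    }

    // Returns an index in the range 0 .. clipCount - 1.
    int pickIndex() {
        return rng.nextInt(clipNames.length);
    }

    String nameAt(int index) {
        return clipNames[index];
    }

    public static void main(String[] args) {
        ClipPicker picker = new ClipPicker(7, new Random());
        int index = picker.pickIndex();
        System.out.println("Would play " + picker.nameAt(index));
    }
}
```

Tying the range to the array length means that adding an eighth recording only requires changing the array size, not the random call.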

The final step in putting it all together was adding a home prompt screen and then different “states” within the code, which let the user switch between the home page, the illustration with the bubbles, and the screen that plays a chapter clip with its waveform. The home screen I added is a quote from Brian Massumi, who translated and wrote the foreword for the most recent translation of A Thousand Plateaus, offering a way to read, or here play, the book:

How should A Thousand Plateaus be played? When you buy a record there are always cuts that leave you cold. You skip them. You don’t approach a record as a closed book that you have to take or leave. Other cuts you may listen to over and over again. They follow you. You find yourself humming them under your breath as you go about your daily business.
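The switching between screens can be sketched as a small state machine. This is a plain-Java illustration under stated assumptions rather than the project's code: the program itself uses the integers 3 (home prompt), 0 (illustration with bubbles), and 1 (clip playing), while the enum and the names `ScreenFlow`, `rightArrowPressed`, `bubbleClicked`, and `clipClickedOrFinished` are hypothetical labels for the same transitions.

```java
// Hypothetical model of the sketch's screen states, for illustration only.
class ScreenFlow {
    // HOME ~ state 3, ILLUSTRATION ~ state 0, CLIP ~ state 1 in the sketch.
    enum Screen { HOME, ILLUSTRATION, CLIP }

    private Screen current = Screen.HOME;

    Screen current() {
        return current;
    }

    // The right arrow key always leads to the illustration screen.
    void rightArrowPressed() {
        current = Screen.ILLUSTRATION;
    }

    // Clicking a bubble only has an effect on the illustration screen.
    void bubbleClicked() {
        if (current == Screen.ILLUSTRATION) {
            current = Screen.CLIP;
        }
    }

    // A click during playback, or the clip reaching its end,
    // returns to the illustration screen.
    void clipClickedOrFinished() {
        if (current == Screen.CLIP) {
            current = Screen.ILLUSTRATION;
        }
    }

    public static void main(String[] args) {
        ScreenFlow flow = new ScreenFlow();
        flow.rightArrowPressed();
        flow.bubbleClicked();
        System.out.println(flow.current());
    }
}
```

Named states make each branch of the drawing code easier to read than the bare numbers 3, 0, and 1, at the cost of a little more setup.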

The full code for the program can be found below!

import processing.video.*;

Movie [] mymovies = new Movie [7];

PImage img;

PImage img1;

int moviePlayingNow = 0;

int state = 3;

void setup() {

size(650, 375);

background(255, 224, 193);

img1 = loadImage("new.png");

img = loadImage("rhizome.png");

img1.resize(550, 0);

image(img1, 70, 30);

for (int i = 0; i<mymovies.length; i++) {

int tempNum = i+1;

String movieName = tempNum + ".mp4";

mymovies[i] = new Movie (this, movieName);

}

}

void draw() {

background(255, 224, 193);

if (state == 3) {

image(img1, 70, 30);

} else if (state == 0) {

startScreen();

} else if (state == 1) {

image(mymovies[moviePlayingNow], 25, 14, 600, 340);

if(mymovies[moviePlayingNow].time()>mymovies[moviePlayingNow].duration()-.1){

state=0;

}

}

println(state);

println(mouseX);

println(mouseY);

}

void mouseClicked() {

if (state==1) {

mymovies[moviePlayingNow].stop();

state = 0;

} else if (state==0) {

//bubble 1

if (mouseX>168 && mouseX<193

&& mouseY>128 && mouseY<161) {

randomClip();

}

//bubble 2

if (mouseX>263 && mouseX<284

&& mouseY>226 && mouseY<243) {

randomClip();

}

//bubble 3

if (mouseX>304 && mouseX<326

&& mouseY>104 && mouseY<126) {

randomClip();

}

//bubble 4

if (mouseX>358 && mouseX<382

&&mouseY>167 && mouseY<190) {

randomClip();

}

//bubble 5

if (mouseX>425 && mouseX<442

&& mouseY>139 && mouseY<157) {

randomClip();

}

//bubble 6

if (mouseX>510 && mouseX<540

&& mouseY>96 && mouseY<124) {

randomClip();

}

//bubble 7

if (mouseX>534 && mouseX<564

&& mouseY>174 && mouseY<200) {

randomClip();

}

}

}

//void mousePressed() {

//}

void keyPressed() {

if (keyPressed && (key == CODED)) {

if (keyCode == RIGHT) {

state = 0;

image(img, 25, 15);

}

}

}

//Play random video from array of videos

void randomClip() {

//stop everything else

for (int i=0; i<mymovies.length; i++) {

mymovies[i].stop();

}

//randomize a number corresponding to video

int randomNum = floor(random(0, 7));

moviePlayingNow = randomNum;

//call movie to play

mymovies[moviePlayingNow].play();

state = 1;

}

//Start screen with quotes to rhizome screen

void startScreen() {

image(img, 25, 15);

}

void movieEvent(Movie m) {

m.read();

}
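The seven bubble checks in mouseClicked() all follow the same pattern, so one possible variation is to keep the rectangles in a table and loop over them. The sketch below is plain Java and only an illustration: the class `BubbleHitTest` and the method `bubbleAt` are hypothetical names, while the coordinates are copied from the bubble checks above and the comparisons stay strict, as in the original.

```java
// Hypothetical refactoring of the bubble checks, for illustration only.
class BubbleHitTest {
    // Each row is {left, right, top, bottom}, taken from the sketch.
    static final int[][] BUBBLES = {
        {168, 193, 128, 161}, // bubble 1
        {263, 284, 226, 243}, // bubble 2
        {304, 326, 104, 126}, // bubble 3
        {358, 382, 167, 190}, // bubble 4
        {425, 442, 139, 157}, // bubble 5
        {510, 540,  96, 124}, // bubble 6
        {534, 564, 174, 200}  // bubble 7
    };

    // Returns the zero-based index of the bubble containing (x, y),
    // or -1 if the point lies outside every bubble.
    static int bubbleAt(int x, int y) {
        for (int i = 0; i < BUBBLES.length; i++) {
            int[] b = BUBBLES[i];
            if (x > b[0] && x < b[1] && y > b[2] && y < b[3]) {
                return i;
            }
        }
        return -1;
    }

    public static void main(String[] args) {
        // A point inside the first bubble.
        System.out.println(bubbleAt(180, 140));
    }
}
```

In the sketch itself, mouseClicked() could then call randomClip() whenever such a lookup on mouseX and mouseY returns anything other than -1.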